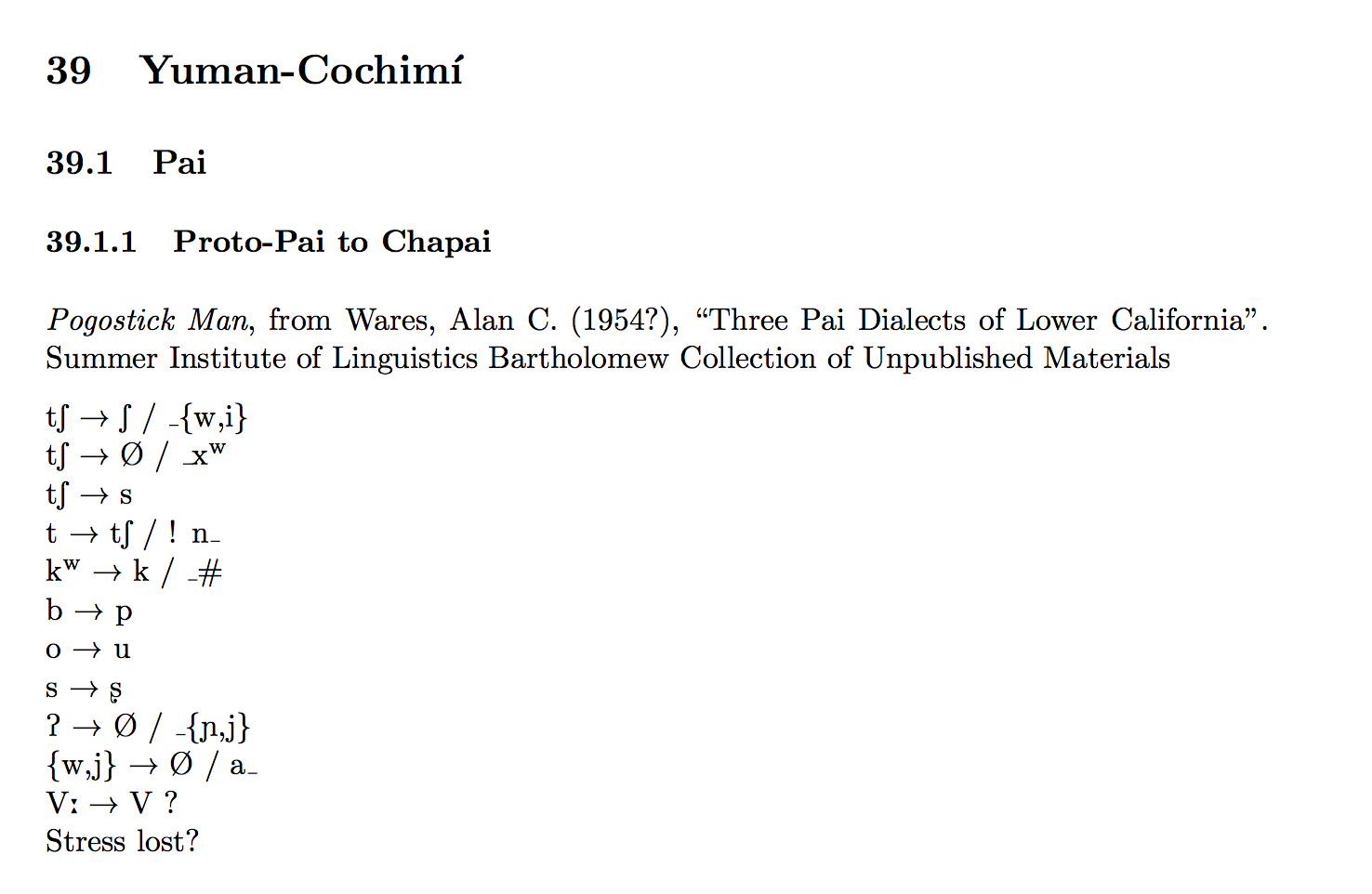

Family

Yuman-Cochimí

Subfamily

Pai

Parent

Proto-Pai

Child

Chapai

Contributor

Pogostick Man

Reference

Wares, Alan C. (1954?) “Three Pai Dialects of Lower California”. Summer Institute of Linguistics Collection of Unpublished Materials.

Changeset

tʃ → ʃ / _{w,i}

tʃ → ∅ / _xʷ

tʃ → s /

t → tʃ / ! n_

kʷ → k / _#

b → p

o → u

s → ʂ

ʔ → ∅ / _{ɲ,j}

{w,j} → ∅ / a_

Vː → V ?

Stress lost?

But of course, the thing we really want to model here are the changes themselves. It’s pretty interesting to note that user Pogostick Man has transcribed these changes into IPA, from the original notation.

A key question is to what degree the whole Index Diachronica has been transliterated into IPA. We’ll have to do some analysis to determine if that’s the case.

What’s in a Change?

So, the heart of the matter: what is the actual data contained in a diachronic change? Let’s take a simple one:

kʷ → k / _#

This can be read:

Proto-Pai /kʷ/ became Chapai /k/ word-finally.

We can think of the data like this:

{

"parent": "Proto-Pai",

"child": "Chapai",

"before": "kʷ",

"after": "k",

"environment": "_#"

}

So this is a little more structured — it captures the basic information in the prose description above. Let’s try another:

Proto-Pai /b/ became Chapai /p/.

{

"parent": "Proto-Pai",

"child": "Chapai",

"before": "b",

"after": "p",

"environment": null

}

Here we don’t even need to mention the environment, because apparently this rule applied across the board.

We can actually untangle some of the more complicated-looking rules by just viewing them as abbreviations for multiple individual rules. So, the rule:

tʃ → ∅ / _{w,i}

Can actually be interpreted as two rules:

tʃ → ∅ / _w

tʃ → ∅ / _i

Which boil down to:

{

"parent": "Proto-Pai",

"child": "Chapai",

"before": "tʃ",

"after": "∅",

"environment": "_i"

}

…and…

{

"parent": "Proto-Pai",

"child": "Chapai",

"before": "tʃ",

"after": "∅",

"environment": "_w"

}

These representations are valid JSON. You might be wondering why we should want to convert rules into JSON — the answer is, then the rules are data, and we can do all kinds of neat things with them. For one thing, we can render them in whatever format we want.

But cooler than that, once we have some rules in place, and we have some text in any language in IPA, we can run the rules on text. How cool would it be to run a Chapai changeset on… say, Irathient, just because?

Try deleting the “t” in in “tʃ” the box above. You’ll see that the

rendered rule on the right changes (to ʃ → ∅ / _w), because a little

Javascript program is watching that box, interpreting the data, and templating

out a familiar representation of that data. This kind of thing is just the tip

of the iceberg of what we can do with a full database model of the changes in

the Index Diachronica.

From Changes to Changeset

So just to wrap up this little intro, I suggest representing this whole changeset (ordered list of changes) for Proto-Pai to Chapai in the following way:

{

"metadata": {

"parent": "Proto-Pai",

"child": "Chapai",

"contributor": "Pogostick Man",

"source": "Wares, Alan C. (1954?) “Three Pai Dialects of Lower California”. Summer Institute of Linguistics Collection of Unpublished Materials.",

"family": "Yuman-Cochimí",

"subfamily": "Pai",

"notes": [

"The last rule, “Stress lost?” should probably checked in the original source."

]

},

"changeset": [

{

"before": "tʃ",

"after": "ʃ",

"environment": "_w"

},

{

"before": "tʃ",

"after": "ʃ",

"environment": "_i"

},

{

"before": "tʃ",

"after": "∅",

"environment": "_xʷ"

},

{

"before": "tʃ",

"after": "s",

"environment": null

},

{

"before": "t",

"after": "tʃ",

"environment": "! n_"

},

{

"before": "kʷ",

"after": "k",

"environment": "_#"

},

{

"before": "b",

"after": "p",

"environment": null

},

{

"before": "o",

"after": "u",

"environment": null

},

{

"before": "s",

"after": "ʂ",

"environment": null

},

{

"before": "ʔ",

"after": "∅",

"environment": "_j"

},

{

"before": "ʔ",

"after": "∅",

"environment": "_ɲ"

},

{

"before": "w",

"after": "∅",

"environment": "a_"

},

{

"before": "j",

"after": "∅",

"environment": "a_"

},

{

"before": "Vː",

"after": "V",

"environment": null

}

]

}

I left out the last rule, because it’s kind of unclear what it means. I’ve added a note in the metadata to this effect. The idea is that someone who comes along and wants to check this file could go off and verify that rule in the original source.

There are many details left out here, we’ll continue to work through them as we continue to work on the project.

Please note — we’re not going to write out all these data files by hand! We’ll take several steps to get the database bootstrapped. We’ll extract as much data automatically as we can, and then we’ll build a simple user interface to allow contributors to check and update the information. More soon.